AI infrastructure is forcing data centres to rethink how they manage heat. While rising power density is often the headline concern, the deeper challenge is that thermal behaviour has become dynamic, uneven and tightly coupled to workload patterns. As training and inference workloads fluctuate, heat no longer behaves as a steady by-product that can be managed with static margins.

For data centre operators and managers, this is driving a shift in perspective. Heat management is increasingly understood as a connected system that spans the entire facility, from how heat is generated and captured within the data hall to how it is transported, rejected or reused beyond it. Cooling is no longer a fixed layer beneath the IT stack. It is an adaptive capability that must evolve alongside workloads.

Why AI workloads break traditional assumptions

Conventional data centre designs assumed gradual change. Electrical loads increased predictably, airflow patterns remained stable, and cooling systems could be sized conservatively. AI disrupts that stability.

Modern AI workloads introduce sharp changes in utilisation. Power draw can rise and fall rapidly, often across mixed hardware estates with very different thermal characteristics. These electrical shifts translate immediately into thermal effects at the rack and room level. Without fast, coordinated cooling response, temperature instability follows.

This is why heat can no longer be treated as a downstream problem. Decisions about power delivery, rack density and workload placement now have immediate thermal implications, making closer alignment between IT and cooling essential.

Capturing heat where it is generated

As densities increase, the point at which heat is captured becomes more important. Traditional reliance on bulk airflow struggles to keep pace with high-density AI racks, where airflow requirements and fan energy consumption rise quickly.

Liquid cooling approaches address this by removing heat closer to the source. Direct-to-chip liquid cooling captures thermal energy at the processor level, reducing reliance on high-volume airflow and allowing tighter control under sustained load.
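
A rough calculation helps show why bulk airflow struggles at high density. The sketch below applies the steady-state heat balance (heat = density × specific heat × volumetric flow × temperature rise) to air and to water. The fluid properties, the 12 K air-side and 10 K water-side temperature rises, and the rack loads are illustrative assumptions rather than figures from any specific deployment, and the sketch covers flow volume only, not fan or pump energy.

```python
def volumetric_flow_m3_s(heat_w, density_kg_m3, cp_j_kg_k, delta_t_k):
    """Coolant flow needed to carry heat_w at the given temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (density_kg_m3 * cp_j_kg_k * delta_t_k)


# Assumed approximate fluid properties near room temperature.
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}
WATER = {"density_kg_m3": 997.0, "cp_j_kg_k": 4180.0}

# Hypothetical rack loads, chosen only to show how the requirement scales.
for rack_kw in (10, 40, 100):
    air = volumetric_flow_m3_s(rack_kw * 1000, delta_t_k=12.0, **AIR)
    water = volumetric_flow_m3_s(rack_kw * 1000, delta_t_k=10.0, **WATER)
    print(f"{rack_kw:>3} kW rack: air {air:.2f} m3/s, water {water * 1000:.2f} L/s")
```

Under these assumptions, water carries the same heat with a volumetric flow thousands of times smaller than air, which is the physical basis for capturing heat at the chip.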

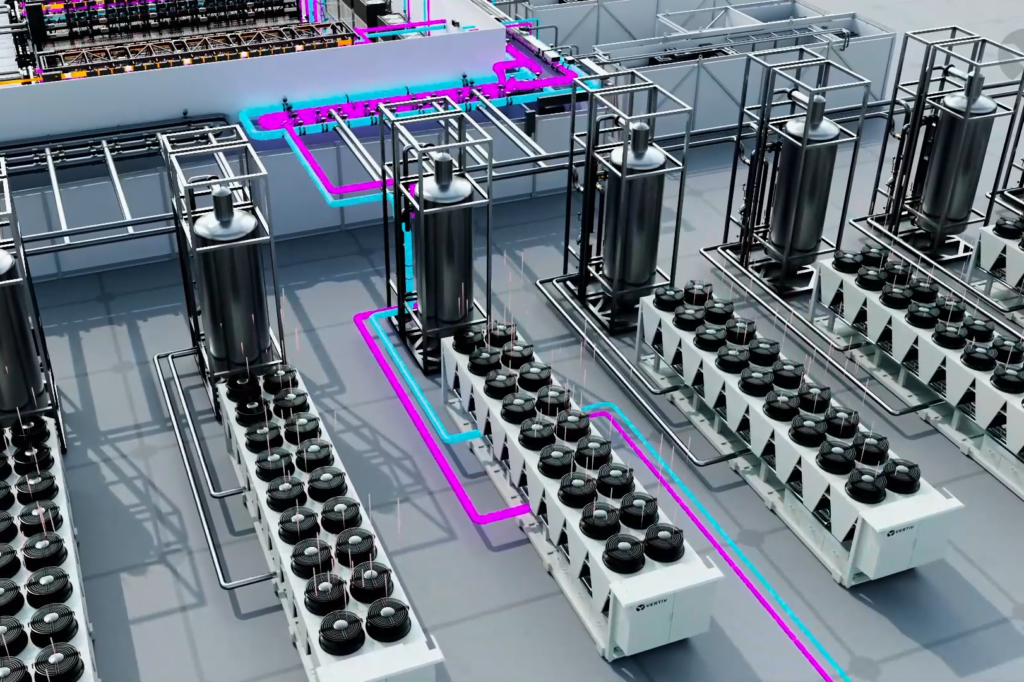

Recent expansions in the EMEA region have introduced new coolant distribution unit (CDU) models with capacities including 70 kW, 121 kW, 600 kW and even up to 2300 kW (2.3 MW). These are available in both in-rack and row-based configurations, supporting liquid-to-air and liquid-to-liquid cooling loops. This enables flexible deployments, whether for retrofitting existing facilities or building new greenfield data centres.
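
To illustrate how those capacity steps map onto a deployment, the following sketch estimates how many CDUs of each size a group of racks might need. Only the four nominal capacities come from the text above. The rack count, per-rack load, the share of heat captured by liquid and the single spare unit are hypothetical assumptions, and real sizing would also depend on flow, pressure drop and supply temperatures.

```python
import math

# Nominal CDU capacities quoted in the article, in kW.
CDU_CAPACITIES_KW = (70, 121, 600, 2300)


def cdus_needed(racks, rack_kw, liquid_fraction, cdu_kw, spares=1):
    """Duty units to cover the liquid-side load, plus assumed spare units."""
    liquid_load_kw = racks * rack_kw * liquid_fraction
    duty_units = math.ceil(liquid_load_kw / cdu_kw)
    return duty_units + spares


# Hypothetical hall section: 20 racks at 80 kW, 75% of heat captured by liquid.
for capacity in CDU_CAPACITIES_KW:
    count = cdus_needed(racks=20, rack_kw=80, liquid_fraction=0.75, cdu_kw=capacity)
    print(f"{capacity:>4} kW CDU: {count} units including one spare")
```

The remaining share of the rack heat in this example would still need to be handled on the air side, which is one reason hybrid halls persist.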

Operators are also increasingly backing CDUs with uninterruptible power supply (UPS) systems so that cooling remains available and operations continue through power disruptions.

Crucially, these technologies support incremental adoption. Many facilities are deploying hybrid setups, where some racks transition to liquid cooling while others remain air-cooled. Air- and liquid-cooled racks may sit directly next to each other or in adjacent rows, preserving flexibility as AI use cases evolve.

Designing the data hall for mixed density

Most data centres will operate hybrid systems for the foreseeable future. Supporting this requires careful attention to how heat is managed at the room level, not just at individual racks.

Intermediate solutions such as rear-door heat exchangers and row cooling help reduce the thermal burden on central systems by intercepting heat before it spreads through the space. At the same time, room-scale design choices play an increasingly important role.

Perimeter-based air-handling units and thermal wall technologies are being used to define airflow paths at the boundaries of the data hall, enabling more controlled heat collection across both raised and non-raised floor facilities. In mixed-density AI halls, these architectural elements help maintain predictable conditions as localised and liquid-based cooling strategies are layered in.

The result is greater stability and optionality, allowing operators to increase density selectively rather than committing to wholesale redesign.

Rethinking heat rejection strategies

Once waste heat is captured, it must be rejected or reused efficiently. Here too, AI is reshaping established approaches.

As facilities adopt higher operating temperatures, heat rejection strategies are diversifying. Trim cooling, where compressors only supplement free cooling when conditions demand it, is emerging in data centres designed to operate at elevated water temperatures, extending the use of free cooling and reducing dependence on compressors. In these setups, cooling tracks real-world operating conditions rather than fixed design points, giving operators the flexibility to handle unpredictable conditions.
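
The link between water temperature and free cooling can be sketched with a simple count: free cooling is assumed to be available whenever ambient temperature plus a heat-exchanger approach is at or below the supply water setpoint. The 4 K approach, the two setpoints and the ambient profile below are synthetic, illustrative assumptions and not measured climate data.

```python
def free_cooling_fraction(ambient_c, supply_setpoint_c, approach_k=4.0):
    """Share of samples where ambient air alone can reach the supply setpoint."""
    if not ambient_c:
        raise ValueError("ambient_c must contain at least one sample")
    usable = [t for t in ambient_c if t + approach_k <= supply_setpoint_c]
    return len(usable) / len(ambient_c)


# Synthetic ambient profile: one sample per degree from -5 C to 34 C.
ambient_profile_c = list(range(-5, 35))

for setpoint_c in (18.0, 30.0):
    share = free_cooling_fraction(ambient_profile_c, setpoint_c)
    print(f"Supply water at {setpoint_c:.0f} C: free cooling usable {share:.0%} of samples")
```

With this synthetic profile, raising the supply setpoint increases the share of conditions in which compressors can stay off. A real assessment would use hourly weather data for the site.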

At the same time, centrifugal chiller technologies continue to provide essential backbone capacity. Where reliable cooling performance is required regardless of ambient conditions, or where lower supply temperatures remain necessary, centrifugal systems offer stability and scalability under variable loads. Efficient cooling at this scale also leaves more of the site’s available power for the AI load.

Rather than representing competing philosophies, these approaches reflect different stages of a facility’s thermal journey and different operational priorities.

Control as the unifying layer

What enables this diversity of cooling strategies to function as a coherent system is control. Sensors, analytics and automation increasingly link IT load, airflow management and plant operation in real time.

Modern control platforms coordinate behaviour across the thermal chain, adjusting setpoints, flow rates and cooling capacity in response to changing workloads. This reduces unnecessary energy use, improves resilience and provides the operational insight needed to plan future expansion.
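
As a minimal sketch of what workload-aware adjustment could look like, the function below derives a coolant flow setpoint from the measured IT load (feed-forward) and adds flow only if the return temperature exceeds a limit (feedback trim), then clamps the result to the pump range. All names, gains and limits are hypothetical, and this is not a description of any vendor's control platform.

```python
from dataclasses import dataclass

WATER_DENSITY_KG_M3 = 997.0  # assumed
WATER_CP_J_KG_K = 4180.0     # assumed


@dataclass
class LoopLimits:
    min_flow_l_s: float
    max_flow_l_s: float


def flow_setpoint_l_s(it_load_kw, target_delta_t_k, return_temp_c,
                      return_limit_c, limits, trim_gain_l_s_per_k=0.5):
    """Feed-forward flow from IT load, plus a proportional trim on return temperature."""
    # Feed-forward: flow that carries the load at the target temperature rise.
    base_m3_s = it_load_kw * 1000 / (
        WATER_DENSITY_KG_M3 * WATER_CP_J_KG_K * target_delta_t_k
    )
    base_l_s = base_m3_s * 1000
    # Feedback trim: add flow only when the return temperature is above its limit.
    overshoot_k = max(0.0, return_temp_c - return_limit_c)
    demand_l_s = base_l_s + trim_gain_l_s_per_k * overshoot_k
    # Respect the pump operating range.
    return min(limits.max_flow_l_s, max(limits.min_flow_l_s, demand_l_s))


limits = LoopLimits(min_flow_l_s=2.0, max_flow_l_s=30.0)
for load_kw in (0, 600, 2000):
    flow = flow_setpoint_l_s(load_kw, 10.0, 40.0, 45.0, limits)
    print(f"IT load {load_kw:>4} kW -> flow setpoint {flow:.1f} L/s")
```

Driving the setpoint from IT load lets the loop respond as workloads change rather than waiting for temperatures to drift, while the trim term covers modelling error.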

For operators, this visibility also supports better decision-making. Understanding how heat moves through the facility makes it easier to evaluate new technologies, validate design assumptions and manage risk as AI deployments scale. Paired with a services contract that provides end-to-end support across the thermal chain, from initial design and commissioning through to ongoing optimisation, it helps sustain reliability through expert deployment and proactive maintenance.

Heat as a strategic consideration

As AI continues to reshape digital infrastructure, heat management is becoming a strategic element rather than a purely technical one. Effective cooling increasingly depends on viewing heat management as an end-to-end system. From capture at the rack and room level through to plant-side rejection or reuse, each stage of the thermal chain must work in concert. Facilities that can adapt dynamically, preserve flexibility and align cooling behaviour with workload reality will be better positioned to support AI growth efficiently.

The shift towards thinking in terms of the thermal chain reflects this change. It reframes cooling as an active enabler of performance and scalability, rather than a background utility. For data centres navigating the demands of AI, how heat is managed may increasingly define what is possible.

Enrico Miconi

Enrico Miconi is Senior Manager Product Management, Global Chilled Water Systems at Vertiv